17.1 Introduction and functions of iron

17.1.1 Distribution of body iron in men and women

Table 17.1 shows the amount of iron and its distribution in the body in males and females. Trace amounts of iron are also associated with electron transport and several enzymes. Examples include the heme-containing cytochromes that serve as electron carriers within the cell, iron-sulfur proteins (flavoproteins, heme-flavoproteins) that are required for the first reaction in the electron transport chain, and hydroperoxidases (e.g., catalase and peroxidase).

| Compartment | Male (mg) | Female (mg) | % of total |
|---|---|---|---|
| Total | 3000–4000 | 2000–3000 | 100 |
| Hemoglobin | 2400 | 1700 | 65 |
| Intracellular storage (ferritin and hemosiderin) | 1000 | 500 | 30 |
| Myoglobin | 150 | 120 | 3.5 |
| Transferrin-bound iron | 4 | 3 | 0.1 |
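As a quick arithmetic check on Table 17.1, the sketch below sums the four listed compartments for each sex and expresses each one as a share of that sum. The names `COMPARTMENTS_MG`, `TOTAL_RANGE_MG`, and `compartment_shares` are illustrative only. The sums fall within the stated total ranges. The sex-specific shares it computes (for example, storage iron is roughly 28% of the sum in men versus roughly 22% in women) differ somewhat from the approximate values in the "% of total" column, which this sketch does not attempt to reproduce.

```python
# Iron content (mg) of each compartment listed in Table 17.1, as (male, female).
# The "Total" row is given only as a range, so it is kept separately for comparison.
COMPARTMENTS_MG = {
    "Hemoglobin": (2400, 1700),
    "Intracellular storage": (1000, 500),
    "Myoglobin": (150, 120),
    "Transferrin-bound iron": (4, 3),
}
TOTAL_RANGE_MG = {"male": (3000, 4000), "female": (2000, 3000)}
SEXES = ("male", "female")


def compartment_shares(sex_index):
    """Sum the compartments for one sex (0 = male, 1 = female) and return
    (sum in mg, {compartment: percentage of that sum})."""
    total = sum(values[sex_index] for values in COMPARTMENTS_MG.values())
    shares = {
        name: 100 * values[sex_index] / total
        for name, values in COMPARTMENTS_MG.items()
    }
    return total, shares


for index, sex in enumerate(SEXES):
    total, shares = compartment_shares(index)
    low, high = TOTAL_RANGE_MG[sex]
    print(f"{sex}: compartments sum to {total} mg (table total {low}-{high} mg)")
    for name, pct in shares.items():
        print(f"  {name}: {pct:.1f}%")
```

Computing shares from the compartment sums, rather than from the total ranges, avoids having to pick an arbitrary point within each range.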

17.1.2 Absorption and metabolism of iron

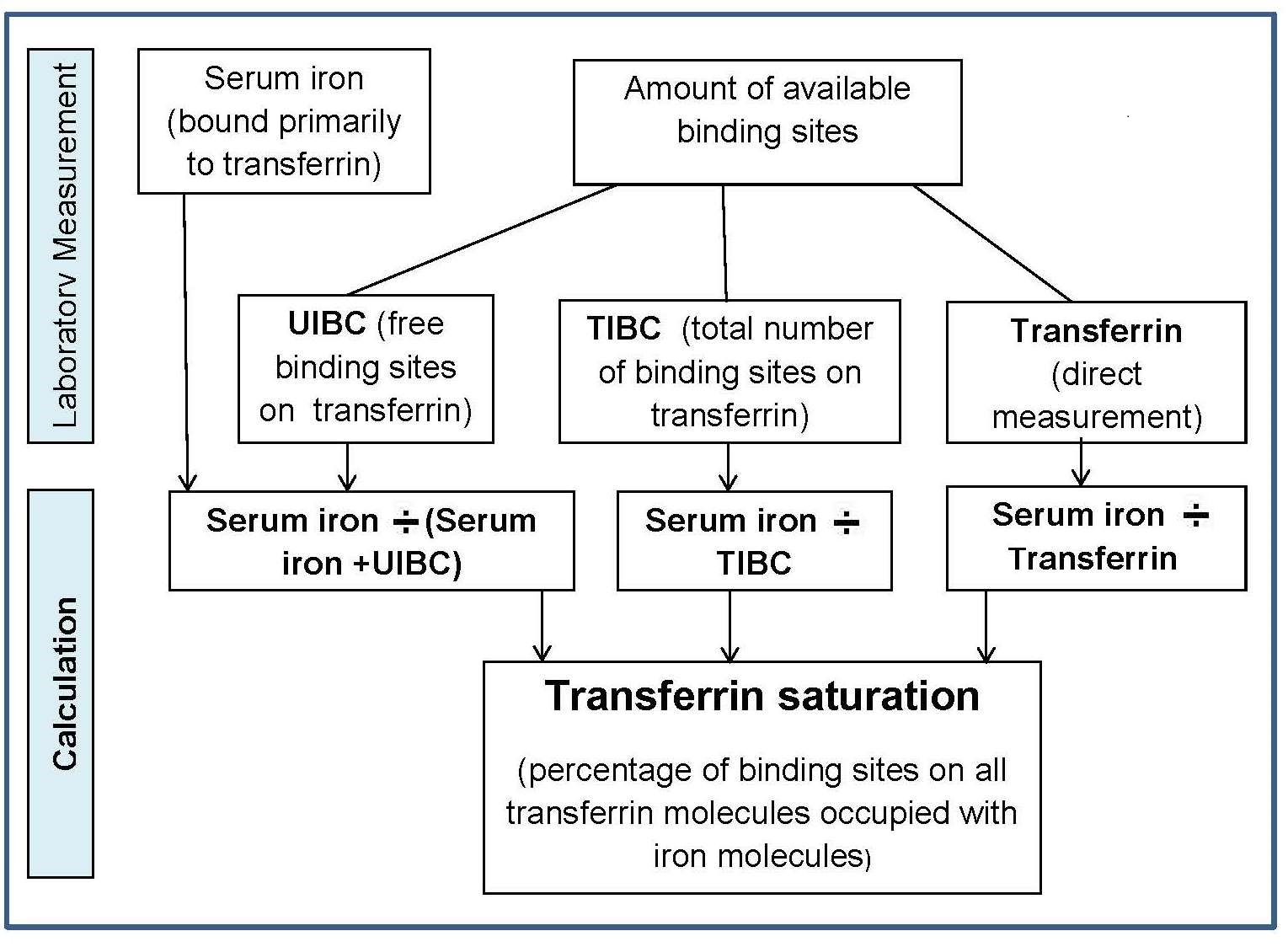

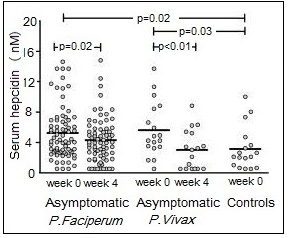

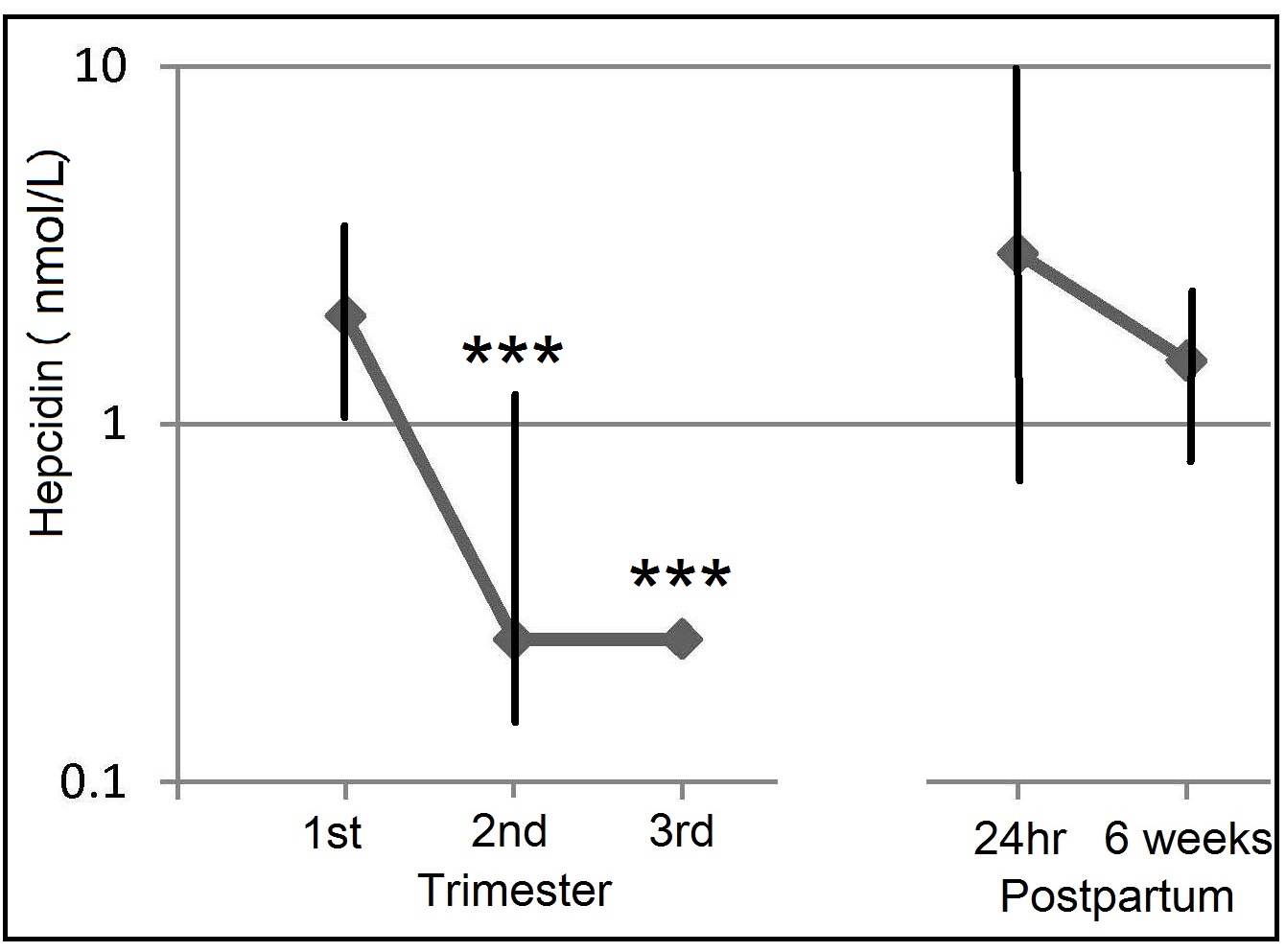

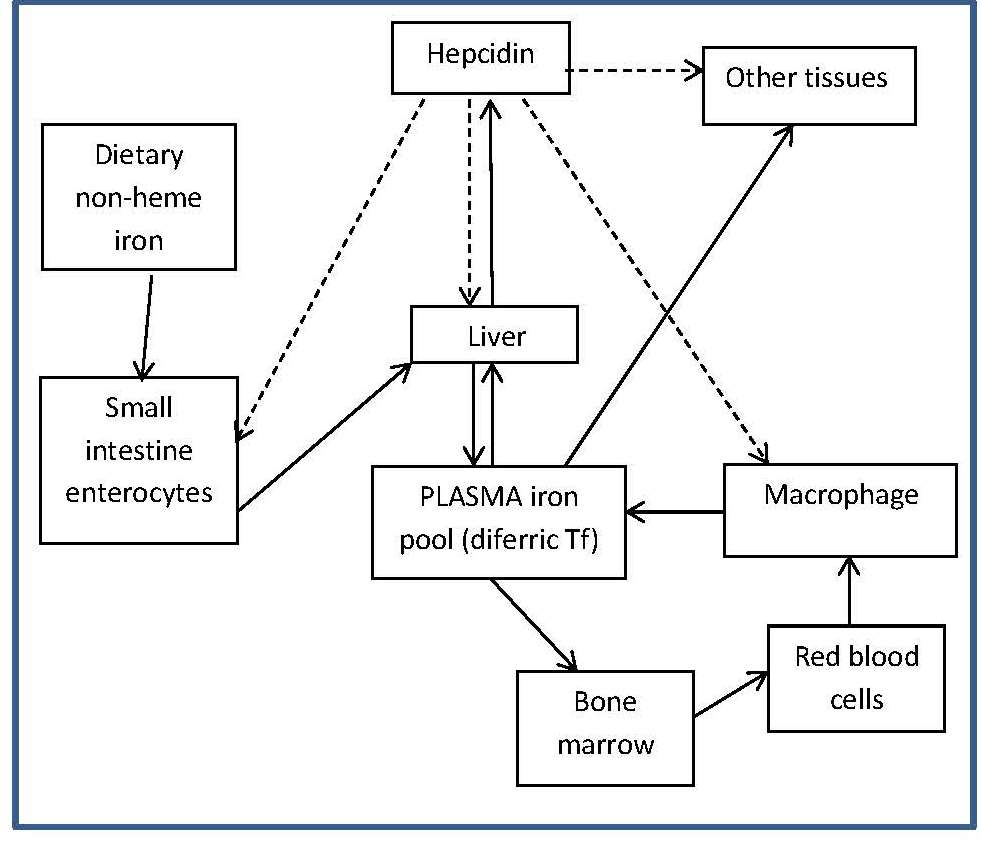

Four main factors operate in the body to maintain iron balance and prevent both iron deficiency and iron overload: (a) intake, (b) absorption, (c) storage, and (d) loss of iron. The interrelationship of these factors has been described mathematically, so that the amount of storage iron can be predicted provided that iron losses and the bioavailability of dietary iron are known (Hallberg et al., 1998). In an iron-sufficient adult male, absorption of dietary iron contributes only about 1 mg/d, whereas menstruating women absorb about 1.5 mg/d to compensate for menstrual blood loss. The diet contains two forms of iron, heme and nonheme, both absorbed primarily in the duodenum. Heme iron is derived mainly from Hb and myoglobin in red meat, poultry, and fish, and is readily absorbed as the intact iron porphyrin. Absorption of heme iron ranges from 15–35%, is little affected by dietary factors, and is regulated primarily in response to total body iron: as demand for body iron increases, there is a corresponding upregulation in the uptake of heme iron and in its rate of transfer to the duodenal enterocytes. The exact mechanism controlling heme iron absorption is poorly understood (Anderson and Frazer, 2017). Once heme has been taken up by the enterocyte, however, iron (Fe2+) is released from it by heme oxygenase, after which the iron follows the same pathway as nonheme iron, described below (EFSA, 2015). Nonheme (i.e., inorganic) iron is found primarily in plant-based foods, but also in meat, eggs, and some dairy foods. Liver, iron-fortified foods, and the seeds of legumes such as beans contain nonheme iron in the form of ferritin, although the mechanism by which ferritin iron is absorbed is poorly understood (EFSA, 2015). Plant ferritin is readily released during cooking and digestion (Lynch et al., 2018).
The absorption of nonheme iron is less efficient than that of heme iron, ranging from 2–20% depending on the iron status of the individual and on other dietary components eaten at the same time; some of these inhibit and others enhance nonheme iron absorption. Most inhibitors act by binding iron in the gastrointestinal tract, preventing its absorption. Enhancers, in contrast, act either by forming complexes that can be taken up by the intestinal iron transport proteins, thus preventing the iron from binding to inhibitors, or by reducing reactive ferric (Fe3+) iron to the less reactive and more soluble ferrous (Fe2+) state. Inhibitors of nonheme iron absorption include phytic acid, polyphenols, calcium, and peptides from partially digested proteins (e.g., soybean protein). Of these, phytic acid (myo-inositol hexaphosphate, IP6) is the main inhibitor of nonheme iron absorption from plant-based diets. However, traditional food processing methods such as soaking, germination, and fermentation can lower the phytic acid content, either by leaching out water-soluble phytate or through phytase hydrolysis, which converts phytic acid to lower myo-inositol phosphates that no longer inhibit nonheme iron absorption (i.e., IP2, IP1) (Gibson et al., 2018). Polyphenol compounds in beverages such as tea, coffee, cocoa, and red wine, in vegetables (spinach, aubergine), legumes (colored beans), and cereals such as red sorghum also inhibit nonheme iron absorption. Their inhibitory effect is dose-dependent, with its strength depending on the structure of the phenolic compounds; the gallate-containing tea polyphenols have the largest inhibitory effect (Hurrell et al., 1999). Calcium inhibits both nonheme and heme iron absorption, although the effect is weak and short-term, with mixed effects on iron status and no reduction in Hb (Abioye et al., 2021).
The mechanism of this calcium effect is not well understood (Lynch et al., 2018).

In contrast, ascorbic acid and muscle tissue from meat, poultry, fish, and liver enhance nonheme iron absorption when eaten in meals containing inhibitors (Lynch et al., 2018). Of these, ascorbic acid is the most potent enhancer of nonheme iron absorption, through its ability to reduce ferric (Fe3+) iron to ferrous (Fe2+) iron at low pH and through its chelating properties (Conrad and Schade, 1968). The enhancing effect of ascorbic acid is dose-dependent and most marked in meals containing high levels of inhibitors such as phytate and polyphenols. Ascorbic acid can also enhance the absorption of many iron fortification compounds (Hurrell et al., 2004), with the exception of NaFeEDTA (Troesch et al., 2009). The mechanism by which muscle tissue enhances nonheme iron absorption is not clear. It may involve cysteine-containing peptides released during digestion, which can reduce ferric (Fe3+) iron to ferrous (Fe2+) iron and chelate iron, as described for ascorbic acid (Taylor et al., 1986).

The intestinal absorption of nonheme iron has been studied extensively; for details see Anderson and Frazer (2017). Briefly, nonheme iron in foods, which exists predominantly as ferric (Fe3+) iron, is solubilized in the gastrointestinal lumen and then reduced to ferrous (Fe2+) iron by duodenal cytochrome b (DCYTB), a ferric reductase on the apical membrane of the enterocyte, before being transported into the enterocytes of the small intestine by the iron-import protein divalent metal-ion transporter 1 (DMT1). When demand for iron is low, the iron can be stored within the cell in the iron storage protein ferritin. When demand for body iron is high, iron is either taken up by mitochondria for heme synthesis or exported into the circulation by the iron export protein ferroportin 1 (FPN1). To leave the cell and bind to plasma transferrin, however, Fe2+ must be oxidized back to Fe3+.
This oxidation is catalyzed by the membrane-bound ferroxidase hephaestin. Transferrin-bound iron is then distributed via the circulation to sites of utilization throughout the body (Figure 17.1).

DCYTB, duodenal cytochrome b;

DMT1, divalent metal-ion transporter 1;

FPN1, ferroportin 1;

diferric Tf, diferric transferrin.

Modified from Anderson and Frazer (2017) and Kondaiah et al. (2019).
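To make the absorption figures above concrete, the sketch below applies the quoted ranges for heme (15–35%) and nonheme (2–20%) iron absorption to a hypothetical daily intake and compares the result with the approximate absorbed amounts cited earlier (about 1 mg/d for an iron-sufficient adult male, about 1.5 mg/d for a menstruating woman). The intake values (1.5 mg heme, 12 mg nonheme) and all function names are illustrative assumptions, not data from the text. Pairing the low ends, and separately the high ends, of both ranges is a further simplifying assumption; the sketch ignores the effects of iron status and of specific inhibitors and enhancers.

```python
# Fractional absorption ranges quoted in the text, as (low, high).
HEME_ABSORPTION = (0.15, 0.35)
NONHEME_ABSORPTION = (0.02, 0.20)

# Approximate absorbed iron per day cited in the text (mg/d).
ABSORBED_NEED_MG = {"iron-sufficient adult male": 1.0, "menstruating woman": 1.5}


def absorbed_iron_range(heme_mg, nonheme_mg):
    """Return (low, high) absorbed iron in mg/d, pairing the low ends and the
    high ends of both absorption ranges (a simplifying assumption)."""
    low = heme_mg * HEME_ABSORPTION[0] + nonheme_mg * NONHEME_ABSORPTION[0]
    high = heme_mg * HEME_ABSORPTION[1] + nonheme_mg * NONHEME_ABSORPTION[1]
    return low, high


def classify(low, high, need):
    """Compare an absorbed-iron range with an absorbed-iron need."""
    if high < need:
        return "short even at the highest absorption"
    if low >= need:
        return "covered even at the lowest absorption"
    return "depends on where absorption falls in the range"


# Hypothetical daily intake (illustrative values, not from the text).
low, high = absorbed_iron_range(heme_mg=1.5, nonheme_mg=12.0)
print(f"Absorbed iron: {low:.2f} to {high:.2f} mg/d")
for group, need in ABSORBED_NEED_MG.items():
    print(f"{group} (about {need} mg/d): {classify(low, high, need)}")
```

With these illustrative intakes, the absorbed range (roughly 0.5 to 2.9 mg/d) straddles both reference amounts. Whether such a diet suffices therefore hinges on absorption efficiency, which for nonheme iron depends on iron status and on the balance of inhibitors and enhancers in the meal.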

17.1.3 Micronutrient interactions with the potential to affect iron absorption and/or metabolism

Deficiencies of iron, vitamin A, iodine, and zinc are major public health problems in low-income countries. They often occur concurrently, especially among infants, young children, and women of reproductive age living in impoverished settings. These high-risk groups all have high micronutrient requirements but frequently low intakes of poorly bioavailable micronutrients. Interactions have been described among these micronutrients, although the mechanisms are not always fully understood.

Iron-vitamin A interactions. Vitamin A may affect several stages of iron metabolism, including erythropoiesis, incorporation of iron into Hb, and the mobilization of iron from ferritin stores (Zimmermann et al., 2006). Vitamin A has also been reported to affect iron absorption, but whether this effect depends on vitamin A status, iron status, or both is uncertain (Hurrell and Egli, 2010). Positive effects of vitamin A on Hb and some iron status indices have been reported in several vitamin A supplementation and fortification trials (Michelazzo et al., 2013), although the precise mechanism remains uncertain. On the basis of these reports, vitamin A deficiency is now recognized as a contributor to anemia, especially in children (Calis et al., 2008).

Iron-iodine interactions. Numerous animal studies indicate that iron deficiency, with or without anemia, impairs thyroid metabolism (Hess et al., 2002). Thyroperoxidase, an iron-dependent enzyme, catalyzes the two initial steps in thyroid hormone synthesis (Hess et al., 2002). Iron deficiency may also reduce thyroid hormone synthesis by altering the thyroid hormone feedback system (Lynch et al., 2018). In intervention studies in Morocco and the Ivory Coast, giving both iron and iodine to iron-deficient goitrous children reduced goiter rates more effectively than giving iodine alone (Zimmermann, 2002).

Iron-zinc interactions.
Iron and zinc appear to compete for absorption, possibly via the shared transporter DMT1 and/or other transporters in the apical membrane of small-intestine enterocytes; the mechanism remains uncertain (Kondaiah et al., 2019). This inhibitory effect appears to occur only when therapeutic doses (a 2–3-fold or greater molar excess of iron relative to zinc) are given to humans in aqueous solutions, and not when they are given in a complex food matrix (Olivares et al., 2012). To date there is no evidence that this interaction is associated with any clinically significant adverse effects (Fischer-Walker et al., 2005; Lim et al., 2013), which allays concerns about using both iron and zinc supplements in public health programs. For a comprehensive review of the possible mechanisms for this iron-zinc interaction, see Kondaiah et al. (2019).

Iron-cadmium and iron-lead interactions. Iron deficiency is a risk factor for increased blood concentrations of cadmium and lead. For cadmium, this is likely due to increased absorption arising from the elevated levels of divalent metal transporter 1 (DMT1) that occur in iron deficiency; for lead, the mechanism is uncertain (EFSA, 2015).

17.1.4 Deficiency of iron in humans

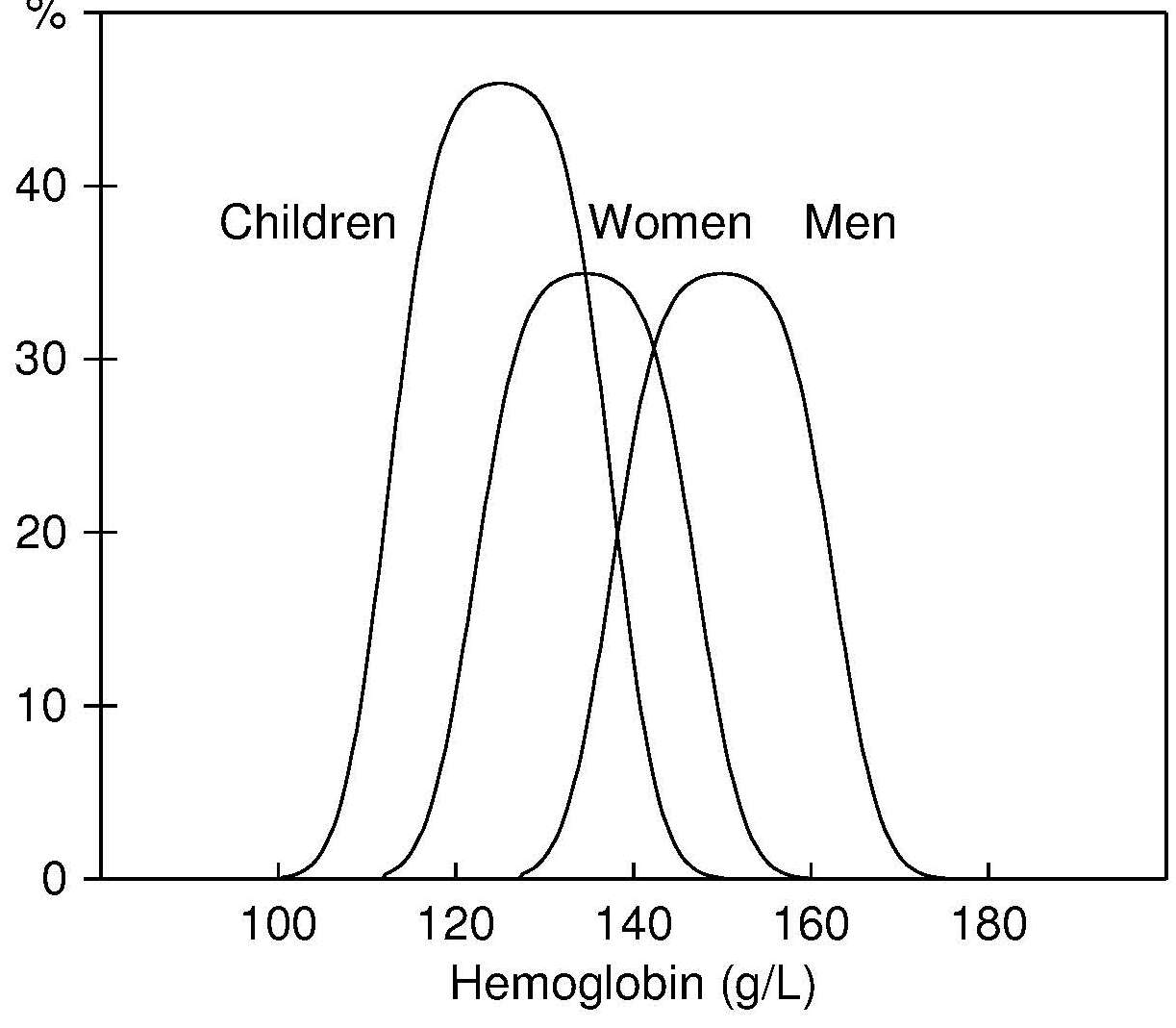

Iron deficiency may arise from inadequate intakes of dietary iron, poor absorption, excessive iron losses, or a combination of these factors. Individuals at greatest risk for iron deficiency include those with high iron requirements for growth (i.e., infants, children and pregnant women), and women of child bearing age with high menstrual losses. Several risk factors have been identified. In industrialized countries, prematurity or low-birthweight, the use of a non-iron-fortified formula, the introduction in young children to cow's milk in the first year of life, and exclusive breastfeeding after 6mos without the regular feeding of iron fortified complementary foods, are all frequently observed causal factors. Among pregnant women, diets low in iron-rich foods, a short interpregnancy interval, and increasingly, obesity, are implicated (Kemper et al., 2017). Additional contributing factors associated with an increased risk of non-anemic iron deficiency among women of child-bearing age with high menstrual losses may include low intake of flesh foods, recent blood donations, nose bleeds, and low body mass index (Heath et al., 2001). In some impoverished settings, additional factors causing iron deficiency may include: bleeding arising from exposure to parasitic helminths (e.g., hookworm) or schistosomiasis, impaired absorption from infection / inflammation or possibly chronic Helicobacter pylori gastritis. In rare cases, genetic disturbances in iron homeostasis arising from genetic mutations, such as in the TMPRSS6 gene, may result in iron-refractory iron deficiency anemia (i.e., iron deficiency that is resistant to oral iron therapy) (Lynch et al., 2018). Anemia is the most common symptom of iron deficiency. In the past, angular stomatitis, glossitis, sideropenic dysphagia, and koilonychia (spoon nails) were also considered physical manifestations of iron deficiency, although rarely observed today.| Consequences | |

|---|---|

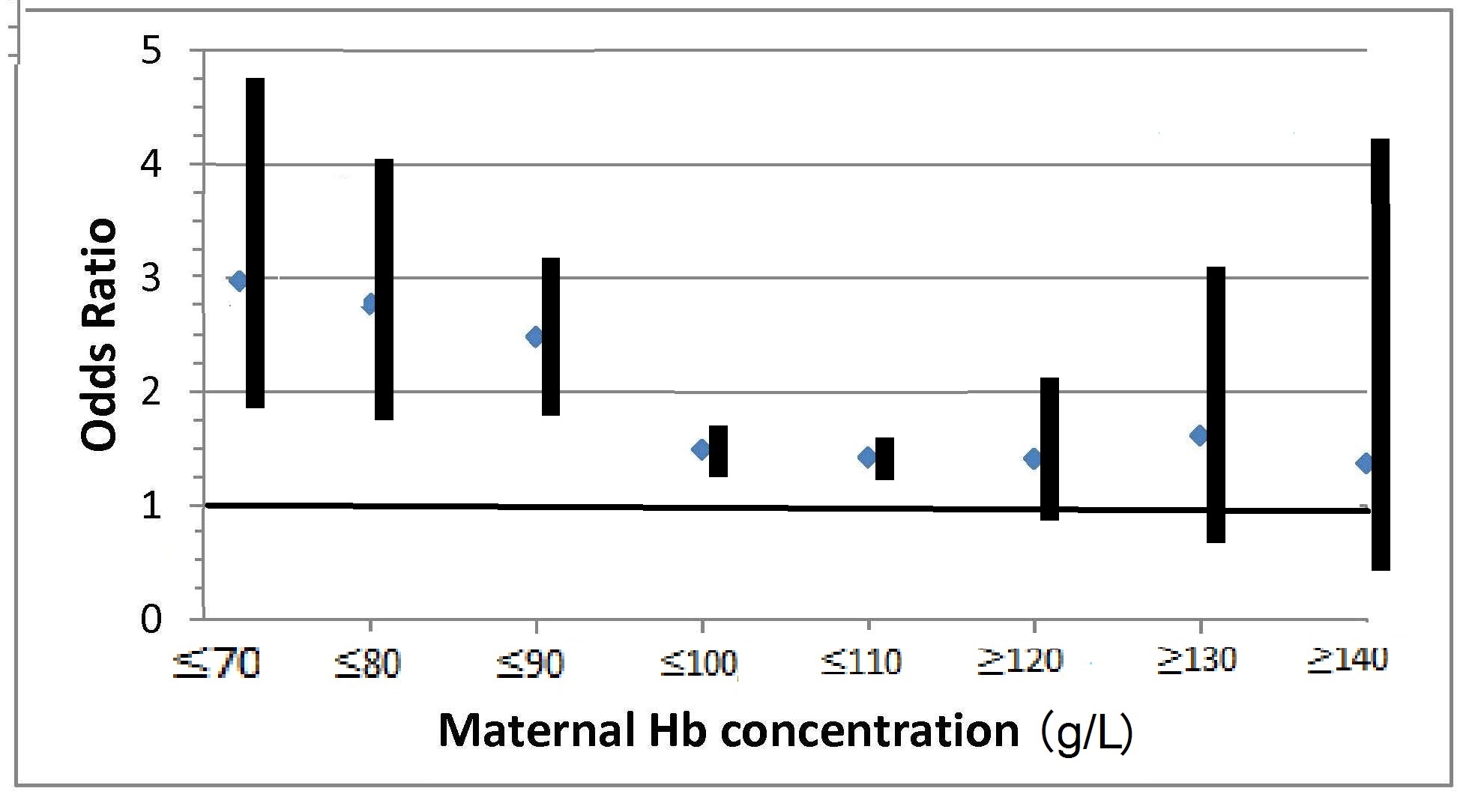

| Pregnancy | Increased risk of anemia and maternal morbidity Increased risk of prematurity and lower birth weight Higher infant mortality |

| Infants and young children | Motor and cognitive developmental delays in infancy Effects on emotional maturation Poorer academic achievement in school-age children who were iron deficient in early childhood Increased risk of severe morbidity from malaria in children < 5y Increased prevalence and duration of upper respiratory tract infections in children |

| All ages | Impaired physical performance and earning capacity Suboptimal response to iodine in populations with endemic goiter and increased risk of impaired thyroid function in the presence of iodine deficiency Increased risk of chronic lead poisoning in high-lead environments Increased risk of “restless leg syndrome” |