Kirkpatrick S. Validity in Dietary Assessment

3rd Edition, December 2025

Abstract

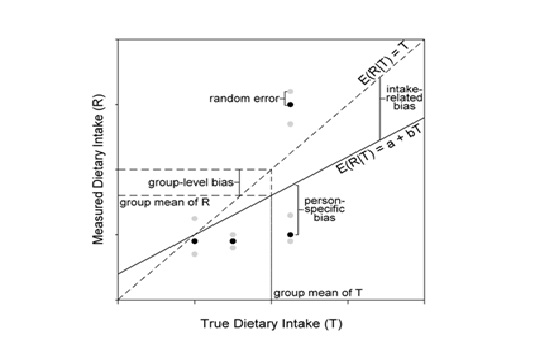

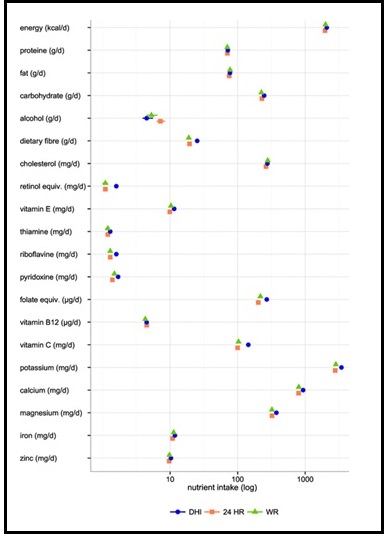

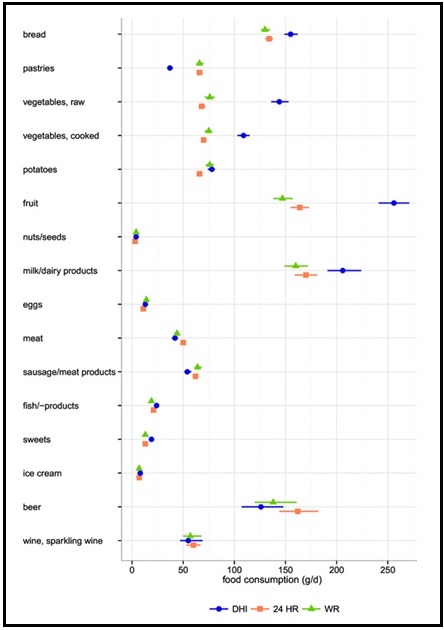

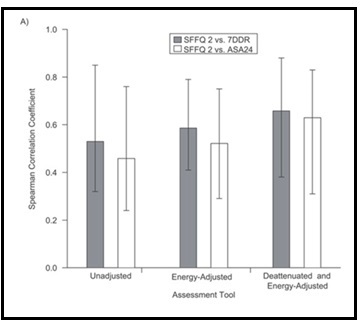

Validation is the process of determining whether a method provides useful information for a given purpose and context. In developing or adapting a dietary assessment method, face and content validity may be assessed with members of the target population and experts in the field to inform and refine the method. Once a method has been developed, it is critical to consider the extent to which it has construct and criterion validity for a given purpose and population. Construct validity indicates whether the method measures what it is intended to measure, whereas criterion validity refers to the method's accuracy in doing so. Criterion validity is best assessed by comparing the method under evaluation with an unbiased reference measure. However, because unbiased measures of dietary intake are logistically and financially challenging to implement, especially in large studies, the method being evaluated may instead be compared with another error-prone measure, usually a self-report method such as weighed food records or multiple 24h recalls. Validity lies on a continuum. Consequently, a given study does not show that a method is valid or invalid; rather, it provides evidence on whether the method has a sufficient level of validity for a given purpose. Inferences about the degree of validity should be specific to the population(s) and context(s) studied; for example, a method found to have a certain level of validity for use with adults may not have the same level of validity when used with adolescents. Furthermore, the full body of evidence available about a method, including evidence on different types of validity and from different study designs, should be considered both when deciding whether a method has adequate validity for a given purpose and when interpreting the data collected. Understanding the extent of the validity of various methods also helps identify strategies to improve dietary assessment.
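To make the idea of comparing a test method with a reference method more concrete, the following is a minimal sketch, not a procedure prescribed by this chapter. It assumes paired intake estimates (one value per participant from each method) and summarizes them with three commonly used statistics: the mean difference (test minus reference, an estimate of bias), Bland-Altman 95% limits of agreement (mean difference ± 1.96 SD of the differences), and the Pearson correlation. The function names `compare_methods` and `pearson_r` and the example intakes are hypothetical. When the reference is itself an error-prone self-report, these numbers describe agreement between two imperfect methods (often termed relative validity), not accuracy against true intake.

```python
import math
from statistics import mean, stdev


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a method shows no variation")
    return sxy / math.sqrt(sxx * syy)


def compare_methods(test, reference):
    """Summarize agreement between paired intakes from a test and a reference method.

    Position i in each list holds participant i's intake estimate from that method.
    Hypothetical helper for illustration only.
    """
    if len(test) != len(reference):
        raise ValueError("test and reference must be paired (same length)")
    if len(test) < 3:
        raise ValueError("need at least 3 participants")
    diffs = [t - r for t, r in zip(test, reference)]
    bias = mean(diffs)      # > 0: test method reports more than the reference, on average
    sd_diff = stdev(diffs)  # sample SD of the paired differences
    return {
        "mean_difference": bias,
        "loa_lower": bias - 1.96 * sd_diff,  # Bland-Altman lower 95% limit of agreement
        "loa_upper": bias + 1.96 * sd_diff,  # Bland-Altman upper 95% limit of agreement
        "pearson_r": pearson_r(test, reference),
    }


if __name__ == "__main__":
    # Hypothetical energy intakes (kcal/day) for five participants; not real data.
    ffq = [2100, 1800, 2500, 1600, 2200]
    recalls = [2000, 1900, 2300, 1700, 2100]
    for name, value in compare_methods(ffq, recalls).items():
        print(f"{name}: {value:.2f}")
```

In this made-up example the correlation is high (about 0.99) while the limits of agreement are wide (roughly ±260 kcal/day around a 40 kcal/day mean difference). This illustrates why a single statistic is rarely sufficient to judge whether a method has adequate validity for a given purpose.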
This chapter discusses the evaluation of the validity of dietary assessment methods, with a focus on the evaluation of criterion validity using error-prone and unbiased reference measures.

CITE AS: Kirkpatrick S. Validity in Dietary Assessment. https://nutritionalassessment.org/Validity/

Email: skirkpat@uwaterloo.ca

Licensed under CC-BY-4.0
